How to Write Review-Friendly Articles

Scientific and technical writing is hard and requires deliberate practice. I already blogged about some of the challenges. Here is some more advice.

General Tips for Technical Writing

In a previous post, I highlighted some common mistakes and suggested improvements. Let’s add some links to additional resources that I keep finding useful:

- Writing for Busy People, a rambling by G. Hohpe, is short but deep and dense. Gregor points out that first impressions count, that storytelling can help to get different target audiences engaged, and that authors can guide their readers. For instance, each list should have an order, and all its items should use the same grammatical constructs (same for sections, tables, etc.).[1] Go to the rambling to learn more![2]

- Harvard professor Steven Pinker shares a video recording of his lecture Linguistics, Style and Writing in the 21st Century on YouTube. Highly recommended!

- Mastering Scientific and Medical Writing — A Self-Help Guide by S. Rogers, Springer-Verlag. I attended a course by the author while at IBM Zurich Research Lab, and apply many of the course topics to this day (well, hopefully I do).

- Texten für die Technik, A. Baumert and A. Verhein-Jarren, Springer-Verlag (PDF). Annette is a colleague of mine, teaching communication courses. I often recommend this German book to students.

So if you do not trust the technical writing advice from a know-it-all software architect, you can find out what Annette and Steven have to say about classic prose, active vs. passive voice and the curse of knowledge 😉

Review-Friendly Writing

Let’s assume we have mastered the craft of technical writing at least to some extent. Once your text passes the “readability” check, you want its content and messages to convince. How to get there? Practice, practice, practice. Iterate. Iterate again. Seek help from a coach or mentor.

The following general quality dimensions and criteria apply to research papers:

- Relevance of paper content and research contribution, in general and w.r.t. the call for submissions.

- Quality of content (research problem and contribution, presented results): technical soundness, depth, novelty; discussion (not just listing) of related work.

- Research approach and method, including validation and critical discussion (for instance, threats to validity of results in empirical software engineering).

- Editorial quality and maturity: see above for a few tips and tricks, and pointers to more.

The various software engineering conferences and journals may define their own criteria, often somewhat specific to the respective research area and topic (software architecture, validation and verification, evolution and maintenance, and so forth). It is worth checking out the respective calls for submissions and websites.[3]

Here are a few more tips:

- Read a few papers that got accepted recently at the venue you are heading for. Focus not on their content but on their organization and writing style; do not blindly copy that style but look for common patterns and try to blend them with your writing preferences and readers’ needs.

- Review your own material in different modes. In one iteration, you only read the table of contents, section headings and figure captions. After that, you only read the abstract, introduction and conclusions (to simulate what some busy readers do). Next, you focus on spelling and punctuation only. And so on, one criterion at a time.[4]

- When writing, switch perspectives from time to time. Let one section rest a bit to work on another one (or take a break), and you will approach it as your own tester (a new member of the target audience, that is) next time you read it (for instance, the next morning).
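The first review mode from the list above (reading only headings and figure captions) can be supported by a small script. The following is a minimal sketch, not a finished tool. It assumes that the draft is written in Markdown, with `#` headings and figures in the form `![caption](file)`; the function name `outline_pass` and the sample draft are made up for illustration.

```python
import re

# Assumption: the draft is Markdown with '#' headings and ![caption](file) figures.
HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")
FIGURE = re.compile(r"!\[([^\]]*)\]\([^)]*\)")


def outline_pass(markdown_text):
    """Return only the headings and figure captions, in document order."""
    skeleton = []
    for line in markdown_text.splitlines():
        heading = HEADING.match(line)
        if heading:
            # Indent by heading level so that the nesting stays visible.
            level = len(heading.group(1))
            skeleton.append("  " * (level - 1) + heading.group(2))
            continue
        for caption in FIGURE.findall(line):
            skeleton.append("[Figure] " + caption)
    return skeleton


# Hypothetical sample draft, for illustration only.
draft = """# Review-Friendly Writing
Some introductory text.
## Quality Criteria
![Scoring scheme for the industry track](scores.png)
More text.
"""

for entry in outline_pass(draft):
    print(entry)
```

If the resulting skeleton does not tell the story of the paper on its own, the headings and captions probably need another iteration. Note that this sketch would also pick up `#` comment lines inside code listings, so drafts with embedded code need a slightly smarter filter.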

Experience reports from practitioners in industry tracks and articles in magazines have different requirements. Novelty/contribution and coverage/analysis of related work do not matter as much here; business context, technical requirements, solutions to them, and lessons learned along the way move up the priority list.

For experience reports, it is a little easier to generalize the writing advice than for software research papers. At ECSA 2020, for instance, we used the following criteria (and scores) to judge submissions to the Industry Track:

- Practical relevance and originality (scores: low, medium, high)

- Technical soundness (scores: low, medium, high)

- Maturity of experience (scores: shallow, medium, deep)

- Reflection and discussion (scores: insufficient, some, thorough)

- Editorial quality (scores: low, medium, high)

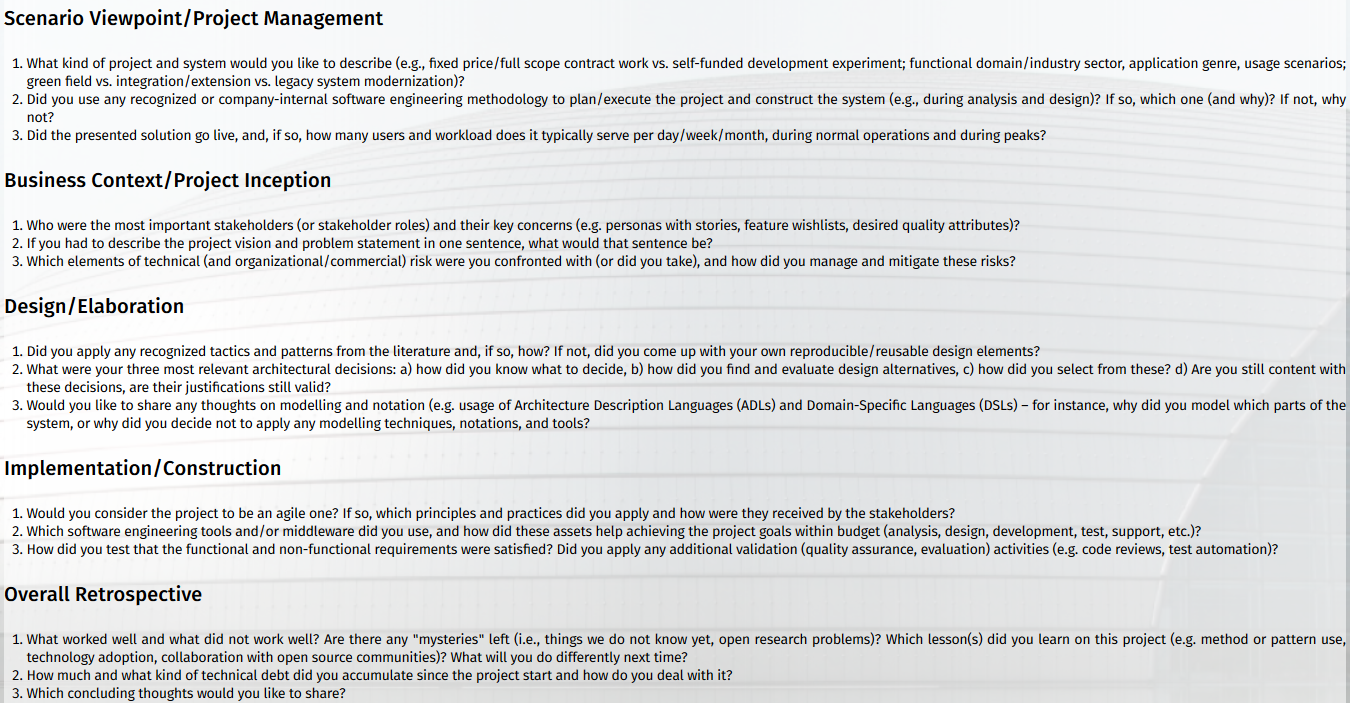

Let’s get a bit more precise and concrete. The questions and criteria that Cesare Pautasso and I asked in our call for submissions to IEEE Software Insights might be somewhat software- and design-centric.5 But they give you an idea:

For a better resolution and a list of all articles published so far, see our Insights landing page.

From Writing to Reviewing

To make the reviewers’ job as easy as possible (which is a good idea), help them answer these questions that elaborate on, and enquire about, the above criteria:

- Is the paper type clear, and does it match the Call for Papers (CFP), e.g., full research paper vs. emerging/short vs. experience report?

- Is the context established (domain/area, previous work)?

- Is the research problem motivated and articulated well? Is it relevant, both w.r.t. the CFP and in general? Is it open or partially open (so not solved yet)?

- Is the research contribution convincing? Does it solve the problem, is it new, does it advance the state of the art sufficiently (over existing work from the same authors and from others in the community, both academia and industry)? Is the contribution comprehensive and mature enough for the publication type (workshop, conference, journal)? Does it work (in theory, in practice)? Does the paper contain enough information to reproduce the research results?

- Does the paper contain a validation section? Which validation method/form was used (e.g., implementation, case study, action research, survey), and is the chosen method adequate for the presented contribution type? How deep do the validation and its presentation in the paper go (e.g., application to a fictitious example vs. a real-world scenario/data)? Are the validation results convincing (or at least good enough to stimulate interesting discussions at the event)? Can the results be replicated? Are the principles for FAIR and Open Computer Science Research Software met?

- Is related work discussed (so not just listed, but analyzed and compared with the contribution)? Are the references balanced and diverse enough (in terms of age, communities, authors, publication types)?

- Is the editorial quality sufficient: mix of/balance between text and figures, use of notation(s), number of typos and language flaws (wording, grammar), flow? Are the figures readable (online, in print)?

If you decide to become a reviewer of research papers yourself, have a look at these sources of information on how to peer review at conference level and at journal level. You can also find the shorthand markup notation that I use when reviewing (and taking meeting minutes) in another post on this blog.

Wrap Up: Writing and Reviewing Go Hand in Hand

This post and the previous one list criteria and give suggestions on how to improve editorial quality. It is more difficult to give general advice regarding the content of research papers. Hence, I abstained from that for the most part, but gave you some questions that reviewers will ask (which reveal some of the writing and reviewing tactics that I use myself).

Now it is time to switch from reading to writing and apply the above tips!

Update (January 2021): Ipek Ozkaya proposed a “Reviewers’ Oath” in her Jan/Feb 2021 guest editorial of IEEE Software, “Protecting the Health and Longevity of the Peer-Review Process”. She asks that reviews be concrete, actionable, relevant, and timely; submitters of papers should also serve as reviewers themselves, and recommend members of their networks to grow and sustain the community.

– Olaf (a.k.a. ZIO, socadk) Contact me if you have feedback or input.

The next post in my mini-series on technical writing is “Shorthand and Markup for Speedy Note-Taking”.

[1] Some examples from my analysis and design practice: use cases have verbs as names, and so do business process activities. Milestones use the past participle (“requirements elicited”, “review completed”). And so on.

[2] Please make sure you read the entire rambling, all the way down to the acknowledgment 😉

[3] I would not assume that reviewers do this every time they serve on a PC or assess a journal submission.

[4] This is the single responsibility principle applied to review iterations!

[5] Note that we no longer accept submissions. See this post.